What engineering metrics should you use?

What are the things that really matter to your organization when it comes to software delivery? Is it speed? Quality? Overall business impact?

In a 2023 UK-based survey, the public and software engineers agreed that prioritizing speed and efficiency is one of the least important factors when using computer systems. Only 22% agreed that this is a high priority.

Instead, the public cares most about data security, data accuracy and preventing serious bugs.

Google’s DORA team continues to push the use of metrics that prioritize speed and efficiency to measure the delivery performance of software teams. This is in contrast to the public, who cared far more about “delivering work that is highly reliable” (51%) or “ensuring the data is kept safe” (47%) as their top priorities.

So why is there such a push for speed when it seems to contradict what the public actually wants?

To ensure reliability and security and to stay afloat as a company, you need to be efficient and understand where you can continue to improve. This is where metrics and metrics frameworks really start to come into play.

So let’s dig into some of the modern metrics frameworks that continue to grow in popularity but are often misunderstood.

DORA Metrics

Of course, the place to start is the DORA metrics. Love them or hate them… they are here to stay, so let’s try to make the best of them.

The DORA (DevOps Research and Assessment) metrics framework was developed as part of a multi-year research project aimed at understanding what differentiates high from low performance in technology development and delivery.

The origin of DORA is closely tied to the work of Dr. Nicole Forsgren, Jez Humble, and Gene Kim, among others, who sought to measure and analyze practices and capabilities that drive high performance in technology organizations.

Their research began around 2013, culminating in the annual State of DevOps Reports, which have become a major reference point in the DevOps community for benchmarking and understanding what practices lead to high-performing teams.

The DORA metrics aim to provide a clear, measurable way of assessing the effectiveness of development and operational practices in software delivery…or at least they try…

I’m sure you have at least heard of the DORA metrics and your organization is either tracking them or has at least talked about it.

So is this a good thing? Not really… it’s not nearly good enough to actually run a good engineering organization. But before I get too far down that road… let’s talk about what they are and why so many people seem to care about them.

What are they?

Time to recover - How long does it take to fully recover from a production incident?

Change failure rate - How many deployments fail or need to be rolled back?

Lead time for change - How long does it take code to make it to production once a developer commits it?

Deployment frequency - How often is a successful deployment made?
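To make these four definitions concrete, here is a minimal Python sketch of one possible calculation methodology. The `Deployment` and `Incident` records, their field names, and the sample data are all hypothetical. The choices baked in (medians, lead time counted only for successful deployments, "failed" meaning failed or rolled back) are assumptions you would need to agree on for your own organization, not an official DORA implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    committed_at: datetime  # when the developer committed the change (assumed definition)
    deployed_at: datetime   # when the change reached production
    failed: bool            # True if the deployment failed or was rolled back


@dataclass
class Incident:
    started_at: datetime
    resolved_at: datetime


def hours(delta):
    """Convert a timedelta to fractional hours."""
    return delta.total_seconds() / 3600


def dora_summary(deployments, incidents, period_days):
    """Compute the four numbers for one reporting period."""
    successful = [d for d in deployments if not d.failed]
    lead_times = [hours(d.deployed_at - d.committed_at) for d in successful]
    recoveries = [hours(i.resolved_at - i.started_at) for i in incidents]
    failures = len(deployments) - len(successful)
    return {
        "deployment_frequency_per_day": len(successful) / period_days,
        "change_failure_rate": failures / len(deployments) if deployments else 0.0,
        "median_lead_time_hours": median(lead_times) if lead_times else None,
        "median_time_to_recover_hours": median(recoveries) if recoveries else None,
    }


# Hypothetical data for a 7-day period
base = datetime(2024, 1, 1, 9, 0)
deployments = [
    Deployment(base, base + timedelta(hours=2), failed=False),
    Deployment(base + timedelta(days=1), base + timedelta(days=1, hours=4), failed=False),
    Deployment(base + timedelta(days=2), base + timedelta(days=2, hours=6), failed=False),
    Deployment(base + timedelta(days=3), base + timedelta(days=3, hours=1), failed=True),
]
incidents = [
    Incident(base + timedelta(days=3, hours=1), base + timedelta(days=3, hours=2, minutes=30)),
]

summary = dora_summary(deployments, incidents, period_days=7)
print(summary)
```

Every line in `dora_summary` hides a decision: change which records count as a deployment, a failure, or an incident and the numbers move, even though nothing about the team has changed.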

These are all pretty easy to understand and can be easy to track, if you can decide on the methodology for each of them. This can be a bit trickier than you might imagine…

What exactly is an “incident”?

This starts to lean into some of the problems that I have with these metrics. They are incredibly easy to manipulate.

I worked with a client recently on a DevEx assessment. They proudly showed me their dashboards and just about every team was average or high performing. As I started to talk to their development teams, it became very clear that they had a 48% failure rate on their CI/CD pipelines. The calculation methodology they used for Change Failure Rate just didn’t include pipeline failures somehow.
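To show how much the methodology alone can move the number, here is a small Python sketch. The outcome labels and the counts are hypothetical, picked only so that pipeline failures make up 48% of the runs as in the story above. The `change_failure_rate` function and its `count_as_failure` parameter are illustrative names, not part of any tool.

```python
def change_failure_rate(events, count_as_failure):
    """Share of delivery events whose outcome is counted as a failure."""
    if not events:
        return 0.0
    failures = sum(1 for outcome in events if outcome in count_as_failure)
    return failures / len(events)


# Hypothetical outcomes for 25 delivery attempts
events = ["success"] * 12 + ["pipeline_failed"] * 12 + ["rolled_back"] * 1

# Narrow methodology: only rollbacks count as failures
narrow = change_failure_rate(events, {"rolled_back"})

# Broad methodology: pipeline failures count too
broad = change_failure_rate(events, {"rolled_back", "pipeline_failed"})

print(f"narrow: {narrow:.0%}, broad: {broad:.0%}")
```

The same 25 events produce a 4% failure rate under the narrow definition and 52% under the broad one. Whether pipeline failures belong in the metric is a methodology decision, but the dashboard will not tell you which decision was made.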

Let me stop you right now… put down the keyboard… Yes, I know this is not the fault of the metric framework but of the methodology used to calculate it. I get it, but let’s look at another example.

This time let’s look at deployment frequency. What would stop a team from doing a deployment every day, just to do one? It would actually decrease their overall velocity but would make the metric look good.

This is where Goodhart’s law really starts to show itself.

Goodhart’s law is a principle that states, “When a measure becomes a target, it ceases to be a good measure.”

If you take any of the DORA metrics and use them to measure a team, you will get teams that do whatever it takes to make the metrics look good. This will include all kinds of weird things like deploying many times a day or not reporting incidents.

My other big question is, who are these metrics actually for? The CTO, Eng. Manager, Dev team? They just don’t give enough information to anyone. They feel like a report card at the end of the school year. Great, I deploy every 3 days!!! Is that good??? Maybe, but also maybe not.

I think it’s safe to say that the DORA metrics are not enough. So what else is out there?

SPACE Metrics

This is where the SPACE framework comes into play. This is a far more comprehensive set of metrics, or should I say guidelines. Let’s walk through the framework and understand each of the sections.

Satisfaction and Well-Being

This dimension focuses on the happiness, contentment, and overall well-being of the team members. It recognizes that the satisfaction of software engineers is crucial for long-term productivity and creativity. High satisfaction levels are often correlated with lower turnover rates, higher quality work, and greater team cohesion.

Calculation Methodology

Surveys and Questionnaires: Conduct regular, short surveys to quickly assess the team's mood and identify any immediate issues affecting well-being.

Performance

This aspect measures the output of work that teams produce, which can include features, code, documentation, etc. Performance in the SPACE framework is often considered in terms of efficiency and effectiveness in delivering valuable outcomes to customers or stakeholders.

Calculation Methodology

Outcome-based Metrics: Measure the outcomes of work, such as features delivered, bugs fixed, or user stories completed within a sprint or release cycle.

Value Delivery: Track metrics that reflect the value delivered to the customer or business, such as revenue impact, customer satisfaction scores, or usage metrics of released features.

Activity

Activity refers to the actions or tasks that engineers undertake during the software development process. This could include coding, reviewing code, managing projects, etc. It's important to measure activity to understand where time is being spent and how processes might be optimized for better productivity.

Calculation Methodology

Quantitative Tracking: Utilize data from tools like Git to track commits, pull requests, code reviews, and other quantifiable activities.

Data Analysis: Analyze time tracking data to understand the time spent on different types of activities, making sure to differentiate between dev work (coding, designing) and other tasks (meetings, emails).

Communication and Collaboration

This dimension acknowledges the importance of effective communication and collaboration within and across teams. It includes aspects like how information is shared, how decisions are made, and how teams work together to solve problems. Good communication and collaboration practices are essential for coordinating complex software development efforts.

Calculation Methodology

Data Analysis: Analyze communication patterns using tools that map out interactions within and between teams (e.g., email, chat, issue trackers) to identify bottlenecks or silos.

Collaboration Quality: Use surveys or feedback tools to assess the quality of collaboration, including how effectively teams are working together, sharing knowledge, and resolving conflicts.

Efficiency and Flow

Efficiency relates to how resources are used to achieve outcomes, aiming for minimal waste and optimal use of time and effort. Flow refers to the state where teams can work smoothly without unnecessary interruptions or delays, often achieved through practices like continuous integration and delivery, and minimizing work in progress.

Calculation Methodology

Cycle Time and Lead Time: Measure the time it takes for work to move from initiation to completion, including any wait times, to assess flow efficiency.

Work in Progress Limits: Track and optimize the number of tasks being worked on simultaneously to improve flow by reducing context switching and focusing efforts.
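As a rough illustration of the efficiency and flow calculations, here is a Python sketch using a common definition of flow efficiency: hands-on time divided by total elapsed time. The `WorkItem` record, its fields, the sample items, and the WIP limit of 2 are all hypothetical. In practice the timestamps would come from your issue tracker and the active hours from whatever status or time tracking you trust.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class WorkItem:
    started_at: datetime
    finished_at: Optional[datetime]  # None means the item is still in progress
    active_hours: float              # hands-on time, excluding waiting


def cycle_time_hours(item):
    """Elapsed hours from start to finish, wait time included."""
    return (item.finished_at - item.started_at).total_seconds() / 3600


def flow_efficiency(item):
    """Active time divided by elapsed time (1.0 would mean no waiting at all)."""
    return item.active_hours / cycle_time_hours(item)


def work_in_progress(items):
    """Number of items started but not yet finished."""
    return sum(1 for item in items if item.finished_at is None)


# Hypothetical work items
items = [
    WorkItem(datetime(2024, 1, 1, 9), datetime(2024, 1, 3, 9), active_hours=12),
    WorkItem(datetime(2024, 1, 2, 9), datetime(2024, 1, 3, 9), active_hours=6),
    WorkItem(datetime(2024, 1, 3, 9), None, active_hours=2),
]

finished = [item for item in items if item.finished_at is not None]
efficiencies = [flow_efficiency(item) for item in finished]
wip = work_in_progress(items)
over_limit = wip > 2  # assumed team WIP limit of 2

print(efficiencies, wip, over_limit)
```

Both finished items spent three quarters of their cycle time waiting, which is the kind of signal a raw cycle time number hides.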

That’s a lot… I know…

As you can see, there is not a lot of definition to these. To call them metrics is a bit of a stretch. They are more like buckets that a lot of other metrics can be put into.

I really like this methodology and framework overall, but it takes a lot of time, effort, and expertise to iron out the details and determine the right methodologies that fit an organization and its overall goals.

The thing I really like about these is that they are harder to manipulate, and each section has another section that will balance it out. For example, the Activity section can easily be gamed, but if you do this, other areas will suffer. This is a natural way to balance out true efficiency and not just having a dashboard that looks good.

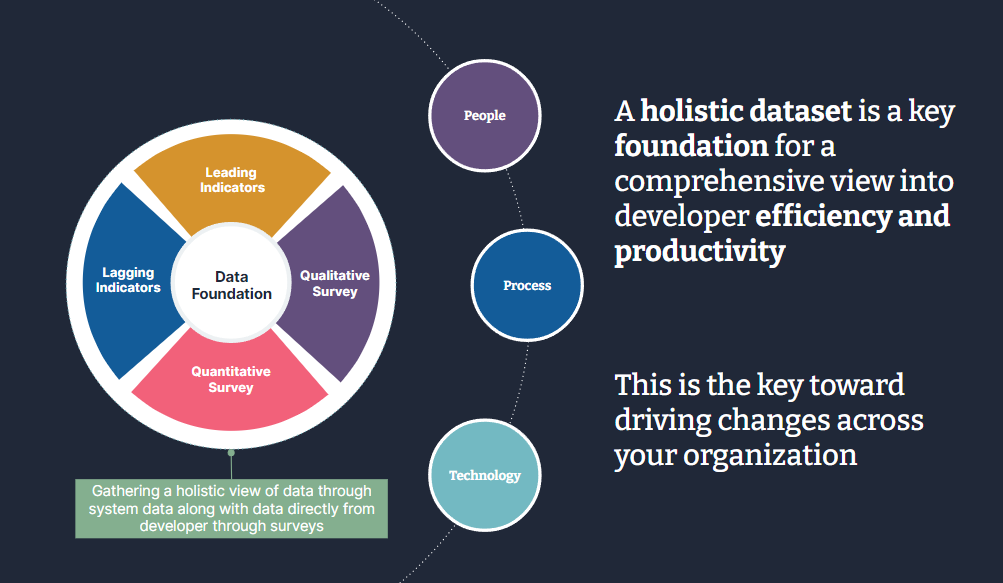

Another major problem with this framework is that it relies upon a solid data foundation. If you don’t have a good survey tool and data pipelines from many different engineering systems, you are really going to struggle to implement these. There are a lot of tools out there that can make this data gathering a lot easier.

If you’re interested to learn more about this or need help, feel free to reach out to us at info@thinkbigcodesmall.co

So are the SPACE Metrics good enough? They can be for some but we are still missing a key component to things… Audience.

Choosing the right audience

The SPACE metrics are a really good base framework to use for an engineering organization. That said, I have not met a lot of CTOs that want a bunch of different metrics to understand how things are going. They usually want one number.

Providing the SPACE metrics as backup details is a great plan, but let’s look at some good metrics that could be used for this type of audience.

Developer Productivity Index - This could be based upon a combination of SPACE metrics to give a directional point of view.

Business Outcomes Alignment - How much of the work being done is allocated to strategic work vs technical debt or bug fixes.

Continuous Improvement Index - How much efficiency is being gained across the organization through optimization efforts.

Tech Debt - The amount and impact of technical debt across the organization.

Engineering Satisfaction - How happy is the development community with the overall developer experience.

These are just a few examples that could be used for this level of reporting. Again, these might be based on other metrics from the SPACE framework but are repackaged in ways that are useful to the audience they are designed for.
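As one way such a repackaging could work, here is a Python sketch of a Developer Productivity Index built as a weighted average of the five SPACE dimensions. The dimension scores, the 0-100 scale, and the weights are all hypothetical. How each dimension gets turned into a score is the hard part, and it is not shown here.

```python
def productivity_index(scores, weights):
    """Weighted average of per-dimension scores, each already scaled 0-100."""
    total_weight = sum(weights[name] for name in scores)
    weighted_sum = sum(scores[name] * weights[name] for name in scores)
    return weighted_sum / total_weight


# Hypothetical scores for one quarter, one per SPACE dimension
scores = {
    "satisfaction": 70,
    "performance": 80,
    "activity": 60,
    "communication": 90,
    "efficiency": 50,
}

# Hypothetical weights: satisfaction counts double in this organization
weights = {
    "satisfaction": 2,
    "performance": 1,
    "activity": 1,
    "communication": 1,
    "efficiency": 1,
}

index = productivity_index(scores, weights)
print(f"Developer Productivity Index: {index:.1f}")
```

A single number like this is only directional. It is most useful as a trend over time, with the underlying SPACE scores kept available as the backup detail.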

Metrics have to be broken down differently depending on who the audience is, and the same should be done for each of the different levels across an organization.

Conclusion

There is no one-size-fits-all approach or silver bullet that can make this super easy. It’s about building the right culture and processes, and continuously striving to make things better.

For any of these types of metrics or productivity initiatives, you have to do them with the community, not to them… or you can very easily ruin your culture and lose your best people.

Let me know what you think. Are you tracking the DORA or SPACE metrics? Are you getting value out of them? What other metrics are you focusing on?